At Digital Masterpieces, we build apps that create impressive artworks from digital photos and videos. For that, we use the device’s graphics processor to transform the media that you load into the apps. The component at the heart of our apps that transforms images and videos into pieces of art is our Rendering Core. Over the last few months, we have been working hard on rewriting this critical component, incorporating the latest trends in image processing technology.

With our new Rendering Core, we replaced our old Core, which was still based on the old rendering library OpenGL. The very first implementation of the old Core dates back almost 10 years to an idea from a student project, and it was high time for an upgrade. For one, OpenGL is deprecated on macOS and won’t get any new features on any of Apple’s platforms. It also imposed many technical limitations, such as on image size (we could not export images larger than 16 megapixels), and it couldn’t keep up with the latest performance improvements in Apple’s own graphics frameworks.

The new Core is built upon Apple’s image processing framework Core Image, which uses the high-performance graphics framework Metal under the hood. This meant a lot of work, since we had to port all of our filters and effects to Metal to integrate them seamlessly with Core Image. That meant rewriting our 73 custom filters, resulting in 10,630 new lines of code. In total, our new Core contains approx. 30k lines of code from 4,300 individual commits by our devs. To keep everything smooth and stable, we wrote 674 unit and integration tests, adding another 7.5k lines of code.

The testing is especially important, since our image processing filters are very complex. Their complexity mainly results from our attempt to model the many different phenomena of artistic media, such as contour lining, varying levels of detail, color blending, smudging, etc. – with many aspects also being controllable by the user.

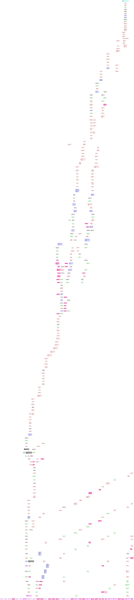

From a user perspective, an effect is just a black box that takes an input image and generates an output image. Technically, it is much more complex: many more processing steps are involved (see the boxes of the processing graph), which add up to a huge number of operations on millions of pixels. For example, applying the Oil Pen preset from our Graphite app to an image internally runs the image through 378 different filtering stages. In total, 952,106,944 pixels need to be processed when stylizing a standard 12 MP iPhone photo. With our new Rendering Core, this process takes only 6.5 seconds on an iPhone 14 Pro. This allows us to increase the maximum export resolution to 64 MP while still keeping processing times reasonable.
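
To put these figures in perspective, the short Python calculation below relates the stated pixel count and processing time to the size of one photo. It assumes that a "standard 12 MP iPhone photo" is 4032 x 3024 pixels; the other two inputs are taken directly from the text. The result is plain arithmetic, not a measurement.

```python
# Assumption: a standard 12 MP iPhone photo is 4032 x 3024 pixels.
width, height = 4032, 3024
pixels_per_photo = width * height

total_pixels = 952_106_944  # pixels processed for the Oil Pen preset (from the text)
seconds = 6.5               # processing time on an iPhone 14 Pro (from the text)

# How many full-resolution passes over the photo the total corresponds to, on average.
full_frame_passes = total_pixels / pixels_per_photo
# Average pixel throughput over the whole run, in pixels per second.
throughput = total_pixels / seconds

print(f"{pixels_per_photo:,} px per photo, ~{full_frame_passes:.1f} full-frame passes, "
      f"~{throughput / 1e6:.0f} Mpx/s")
```

The numbers work out to roughly 12.2 million pixels per photo, about 78 full-frame passes' worth of work, and an average of about 146 million processed pixels per second.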

Our new Core has a lot of tricks up its sleeve to boost performance and efficiency, effectively using concepts and technology of Apple’s Core Image framework:

Culling

Culling is the process of determining the currently visible part of an image and discarding all unneeded pixels, so that only the relevant pixels get processed. For example, when an image has been cropped, the Rendering Core does not process the cropped-away pixels on the GPU. While this seems like an obvious and straightforward optimization, it’s not trivial to implement: since all editing steps can be reverted (including cropping), we need to make sure that any changes outside the cropped area, including retouching and deformations, are preserved.
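
The core idea of culling can be sketched as propagating the visible region backwards through the pipeline: a stage that reads a neighborhood of radius r around each output pixel needs its output region grown by r as input. Core Image expresses the same idea through region-of-interest callbacks on custom kernels. The Python below is a minimal sketch under simplifying assumptions: a linear chain of stages, integer radii, and hypothetical names (`Rect`, `regions_of_interest`). It does not cover preserving edits outside the crop.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned pixel rectangle; x1 and y1 are exclusive."""
    x0: int
    y0: int
    x1: int
    y1: int

    def grow(self, r):
        return Rect(self.x0 - r, self.y0 - r, self.x1 + r, self.y1 + r)

    def clip(self, bounds):
        return Rect(max(self.x0, bounds.x0), max(self.y0, bounds.y0),
                    min(self.x1, bounds.x1), min(self.y1, bounds.y1))

    @property
    def area(self):
        return max(0, self.x1 - self.x0) * max(0, self.y1 - self.y0)


def regions_of_interest(visible, radii, extent):
    """Propagate the visible region backwards through a linear chain of stages.

    radii[i] is how far stage i reads around each output pixel (0 = per-pixel).
    Returns (source_region, per_stage_output_regions) in pipeline order.
    """
    outputs = [None] * len(radii)
    region = visible.clip(extent)
    for i in reversed(range(len(radii))):
        outputs[i] = region
        # Stage i needs its own output region plus its kernel radius as input.
        region = region.grow(radii[i]).clip(extent)
    return region, outputs


extent = Rect(0, 0, 4032, 3024)      # full 12 MP photo
crop = Rect(1000, 1000, 2000, 2000)  # visible 1000 x 1000 crop
source, per_stage = regions_of_interest(crop, radii=[0, 4, 0, 8], extent=extent)
processed = sum(r.area for r in per_stage)  # pixels produced with culling
unculled = len(per_stage) * extent.area     # pixels produced without culling
```

For this hypothetical four-stage chain, only a 1024 x 1024 source region is read, and the stages produce about 4.1 million pixels instead of about 48.8 million.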

Concatenation

Core Image can concatenate multiple processing steps into one program that is executed on the GPU. This increases efficiency: loading and launching each program takes time, so fewer but slightly more complex programs usually reduce the overall execution time. Using this technique, the complex Graphite processing graph above can be reduced from 378 to 77 processing steps. While this is still a big number, it greatly reduces the overhead on the GPU and thus yields a big performance gain.
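
A simplified model of concatenation is shown below in Python. It assumes that only runs of consecutive per-pixel ("point") stages are merged into one stage, while neighborhood stages such as blurs stay separate. Core Image's actual concatenation rules are more involved, and the pipeline is hypothetical. Each executed stage stands in for one program launch on the GPU.

```python
from functools import reduce


def compose(fns):
    """Chain per-pixel functions into one: fns[-1](...fns[1](fns[0](v)))."""
    return lambda v: reduce(lambda acc, f: f(acc), fns, v)


def concatenate(stages):
    """Merge every run of consecutive per-pixel ("point") stages into a single stage."""
    merged, run = [], []
    for kind, fn in stages:
        if kind == "point":
            run.append(fn)
            continue
        if run:
            merged.append(("point", compose(run)))
            run = []
        merged.append((kind, fn))
    if run:
        merged.append(("point", compose(run)))
    return merged


def execute(stages, image):
    """Run stages in order; each stage counts as one pass (one program launch)."""
    passes = 0
    for kind, fn in stages:
        image = [fn(v) for v in image] if kind == "point" else fn(image)
        passes += 1
    return image, passes


def blur3(img):
    """Neighborhood stage: 3-tap box blur with clamped borders (1D for brevity)."""
    n = len(img)
    return [(img[max(i - 1, 0)] + img[i] + img[min(i + 1, n - 1)]) / 3 for i in range(n)]


pipeline = [
    ("point", lambda v: v * 1.2),                # gain
    ("point", lambda v: v - 0.1),                # offset
    ("point", lambda v: min(max(v, 0.0), 1.0)),  # clamp to [0, 1]
    ("neighborhood", blur3),
    ("point", lambda v: v ** 0.5),               # tone curve
    ("point", lambda v: 1.0 - v),                # invert
]
pixels = [0.0, 0.2, 0.5, 0.9, 1.0]
naive, naive_passes = execute(pipeline, pixels)
fused, fused_passes = execute(concatenate(pipeline), pixels)
```

Both runs produce identical pixels, but the concatenated pipeline needs 3 passes instead of 6, because the per-pixel operations are applied in the same order within a single pass.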

Caching

Our effects consist of many different filter stages – conceptual units that take an input image and generate an (intermediate) output image by applying one or more processing steps. During processing, each filter stage changes the image a bit more towards the final result, which means there are a lot of temporary “partially-processed” intermediate images needed.

Using our app’s UI, users can change parameters such as Details or Contour Thickness, effectively reconfiguring one or more filter stages in the complex filter pipeline. To reflect such a change in the app, the Rendering Core has to generate a new output image, which naively means executing every filter stage again. However, since our processing graph is directed and acyclic, and since we know which stages have been reconfigured, we also know all stages that come before the reconfigured ones. These filter stages are not affected by the changed parameter and thus produce the same result as before. Our new Core uses this knowledge and automatically caches intermediate images of stages based on usage, processing time, and available resources. This way, we avoid unnecessary filter executions: only the required filters run again when parameters change, resulting in very fast UI feedback during editing. You can experience the impact of caching in Graphite by changing the Depth and the Contours parameters: adjusting Contours feels much smoother, since more intermediate results can be reused.
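
The invalidation logic described above can be sketched in Python as follows. When a parameter changes, only the changed stage and the stages that transitively consume its output are evicted; upstream results stay cached. This is a minimal sketch with simplifying assumptions: it caches every stage and has no eviction policy (the Core described here also weighs usage, processing time, and available resources), numbers stand in for images, and the class and stage names are hypothetical, only loosely borrowed from Graphite's parameters rather than taken from its actual graph.

```python
class CachedGraph:
    """Evaluates a filter DAG, caching every stage's output until it is invalidated."""

    def __init__(self, inputs, funcs, params):
        self.inputs = inputs        # stage -> list of upstream stages
        self.funcs = funcs          # stage -> fn(param, *upstream_outputs)
        self.params = dict(params)  # stage -> parameter value
        self.cache = {}
        self.executions = 0

    def downstream(self, stage):
        """The stage itself plus every stage that transitively consumes its output."""
        seen, stack = set(), [stage]
        while stack:
            s = stack.pop()
            if s not in seen:
                seen.add(s)
                stack.extend(c for c, ups in self.inputs.items() if s in ups)
        return seen

    def set_param(self, stage, value):
        self.params[stage] = value
        for s in self.downstream(stage):  # upstream results stay cached
            self.cache.pop(s, None)

    def evaluate(self, stage):
        if stage not in self.cache:
            upstream = [self.evaluate(u) for u in self.inputs[stage]]
            self.cache[stage] = self.funcs[stage](self.params.get(stage), *upstream)
            self.executions += 1
        return self.cache[stage]


# Hypothetical graph; numbers stand in for images to keep the sketch small.
graph = CachedGraph(
    inputs={"source": [], "depth": ["source"], "abstraction": ["depth"],
            "contours": ["abstraction"], "output": ["abstraction", "contours"]},
    funcs={"source": lambda p: 10.0,
           "depth": lambda p, x: x + p,
           "abstraction": lambda p, x: x * 0.5,
           "contours": lambda p, x: x * p,
           "output": lambda p, a, c: a + c},
    params={"depth": 1.0, "contours": 2.0},
)
```

After the first `graph.evaluate("output")`, which runs all 5 stages, `graph.set_param("contours", 3.0)` followed by another evaluation re-runs only 2 stages. Changing `"depth"` instead re-runs 4, because more of the graph lies downstream of it.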

Tiling

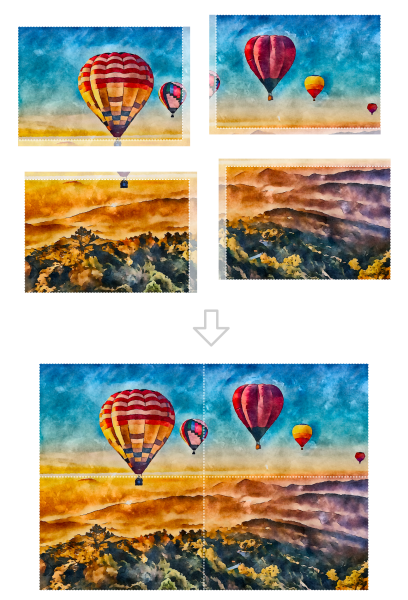

Due to technical limitations in OpenGL, our old Core could only process images with up to 16 megapixels. With the new Core, we decided to quadruple this size, processing images with up to 64 megapixels directly on the iPhone, iPad, or Mac. Since the filter pipeline is very complex with many intermediate results, processing such large images directly would exceed the available memory on most mobile devices.

To resolve this, the new Core supports tiling: during processing, the image is sliced into multiple smaller tiles. Each tile is processed on its own, and in the end, the processed tiles are stitched back together into a full image. The challenge here is to ensure that the tiles match perfectly at their borders so that no visible “seams” remain. The trick is to add a small margin around each tile that gets processed as well and is cut away later when the tiles are glued back together. This guarantees seamless transitions between the tiles. Thankfully, Core Image offers native tiling support, and we “only” had to do the math and adapt all of our filters to support it. One of the major challenges was getting our ML-based style transfer filters to work with tiling as well. For this, we needed to create custom neural network layers and split the execution of the ML networks into multiple passes.
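
The margin trick can be demonstrated with a single box blur of radius r in Python. Each tile is read together with a margin of extra pixels, blurred, and only the tile's own pixels are copied into the result. With a margin of at least r, the stitched image is identical to blurring the whole image at once. Without a margin, pixels near tile borders read the wrong neighbors, which produces seams. This is a minimal sketch; the function names and the tiny test image are hypothetical, and it does not use Core Image's own tiling.

```python
def box_blur(img, r):
    """Average over a (2r+1) x (2r+1) window, clamping reads at the image border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    total += img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            out[y][x] = total / (2 * r + 1) ** 2
    return out


def blur_tiled(img, r, tile, margin):
    """Blur tile by tile; each tile is read with `margin` extra pixels on every side."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            # Input region = tile grown by the margin, clipped to the image bounds.
            y0, y1 = max(ty - margin, 0), min(ty + tile + margin, h)
            x0, x1 = max(tx - margin, 0), min(tx + tile + margin, w)
            region = [row[x0:x1] for row in img[y0:y1]]
            result = box_blur(region, r)
            # Stitch: copy only the tile's own pixels, cutting the margin away.
            for y in range(ty, min(ty + tile, h)):
                for x in range(tx, min(tx + tile, w)):
                    out[y][x] = result[y - y0][x - x0]
    return out


image = [[float((x * 7 + y * 13) % 10) for x in range(10)] for y in range(9)]
reference = box_blur(image, r=2)
```

Here `blur_tiled(image, 2, 4, margin=2)` reproduces `reference` exactly, while `margin=0` does not. For a chain of neighborhood filters, the margin has to cover the sum of all their radii, which is one reason why every filter had to be adapted for tiling.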

Resolution Independence

Another challenge that comes with large export sizes is what we call resolution independence. It means that our effects look consistent between the (lower-resolution) preview and the high-res export, or between a 4 MP and a 64 MP export. For this, we adjust or scale our effects together with the image resolution. Scaling usually means that many more pixels need to be analyzed at higher resolutions, i.e., the so-called kernel size increases. Since Metal is very efficient and allows for much larger kernel sizes than OpenGL, it technically enabled us to offer these high-resolution options without compromising image quality.

The new export resolutions beyond 12 MP together with the very large kernel sizes posed new conceptual and technical challenges we successfully tackled. We redesigned some of our custom filters to scale better with the different resolutions and implemented optimizations to reduce processing time for very large filter kernels, while still ensuring high quality rendering.
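
To illustrate why kernel sizes grow so quickly, the Python below scales a kernel radius proportionally to the image width and counts the input reads per pixel for a full 2D square kernel. The linear scaling rule, the base radius of 5, and the widths of 2000 and 8000 pixels are assumptions chosen for illustration. A 4x larger width means 16x more pixels, the same ratio as between a 4 MP and a 64 MP export.

```python
def scaled_radius(base_radius, reference_width, width):
    """Scale a kernel radius tuned at reference_width to an image of a different width."""
    return max(1, round(base_radius * width / reference_width))


def reads_per_pixel(radius):
    """Input samples needed per output pixel for a full (non-separable) 2D square kernel."""
    return (2 * radius + 1) ** 2


preview_radius = scaled_radius(5, reference_width=2000, width=2000)
export_radius = scaled_radius(5, reference_width=2000, width=8000)
```

The radius grows from 5 to 20, and the reads per pixel grow from 121 to 1,681. Combined with 16x more pixels, this hypothetical filter does roughly 220x more work at the larger size. A widely used way to reduce this cost, not necessarily the one used in the Core, is to split a kernel into separable horizontal and vertical passes, which need 2(2r + 1) reads per pixel instead of (2r + 1)^2.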

This was a small look into the heart of our apps, our new Rendering Core. We are very happy with the rewrite, as it adds many new features under the hood, such as tiling, culling, and caching, that give our users gains in resolution, rendering speed, and fidelity. Since the Rendering Core is now based on Apple’s proven and optimized Core Image and Metal frameworks, our effects run very efficiently and stably on Apple’s hardware. We are amazed at how even older devices, such as the iPhone 6s, can now process images at very high resolutions in reasonable time.

In addition, it is now fairly easy to integrate other image processing technologies like Core ML into our pipelines, which will allow us to add new technologies faster and with much less effort in the future, resulting in even more benefits for our users. This also means that our technology can now be integrated much more easily in other iOS and macOS development projects. If you are interested in using our technology, feel free to contact us!

We are excited and are looking forward to all the new features we are now able to build with our new Core. Stay tuned for many more updates and check out our apps on the App Store!

Digital Masterpieces is home to the BeCasso App Family, consisting of BeCasso, Graphite, Clip2Comic, Oilbrush, Waterbrush and ArtCard.